Insights and a Closer Look into the "Sparks of Artificial General Intelligence: Early Experiments with GPT-4" Paper

Since partnering with OpenAI, Microsoft has invested heavily in integrating OpenAI's GPT models into its suite of tools and products, beginning with Bing Chat and expanding into the Office suite. Large Language Models have never seen so much hype and support.

In this blog post, we will explore Microsoft's paper "Sparks of Artificial General Intelligence: Early Experiments with GPT-4" to understand the capabilities of GPT-4 and how it compares to GPT-3.5 (aka ChatGPT): what GPT-4 can and can't do, and how big the gap between it and ChatGPT really is.

But before we dive deep into the paper and the examples it provides, let's discuss the main concepts these GPT models are built on.

Large Language Models (LLMs)

Large language models are neural networks that are, at a basic level, trained to predict the next word in a document or a sentence. As the name suggests, these models are quite large: they normally have billions of parameters. LLMs are used in natural language generation (NLG) tasks, and they are mostly provided as pre-trained models that can be customized and specialized for a specific task through fine-tuning.

At its core, ChatGPT is a large language model trained on a huge amount of text data to learn a representation of the patterns underlying that text.
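To make the "predict the next word" idea concrete, here is a toy sketch in Python. It is not how GPT models work internally (they use neural networks with billions of parameters, not word counts); it is a simple bigram counter, chosen only to illustrate the same training objective: learn from text which word is likely to come next. The corpus and the function names `train_bigram` and `predict_next` are made up for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigram(corpus: List[str]) -> Dict[str, Counter]:
    """Count, for every word, how often each other word directly follows it."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def predict_next(counts: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigram(corpus)
print(predict_next(model, "the"))  # cat
print(predict_next(model, "sat"))  # on
```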

Although the base model can do well on evals and tests, it's not that easy or useful to use on its own, and this is where RLHF comes in.

Reinforcement Learning from Human Feedback (RLHF)

RLHF is a method used to fine-tune the model's responses and put guidelines on how we want the model to act and respond to different types of prompts. When prompted with a question, the base model can respond in a wide variety of ways, some of which might be far from the user's intent. To align it with the user's intent within guardrails, we fine-tune the model's behavior using reinforcement learning from human feedback.

A diagram showing how basic RLHF works (source)
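The post doesn't go into the mechanics, but as OpenAI described it for InstructGPT, RLHF typically has two learned pieces: a reward model trained on human rankings of candidate responses, and a reinforcement learning step (PPO) that fine-tunes the language model to score well under that reward model. Below is a minimal Python sketch of only the first piece, the pairwise loss commonly used to train the reward model. The reward scores are placeholder numbers, not outputs of any real model.

```python
import math


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise reward-model loss: -log(sigmoid(r_chosen - r_rejected)).

    Small when the reward model scores the human-preferred response higher
    than the rejected one, large when it ranks them the other way around.
    """
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(m)) == log(1 + exp(-m)); split on the sign to avoid overflow in exp.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Placeholder scores for one prompt with a human-preferred and a rejected answer.
print(preference_loss(2.0, -1.0))  # small: the ranking agrees with the human label
print(preference_loss(-1.0, 2.0))  # large: the ranking disagrees
```

Training pushes the reward model to widen the margin in favor of the responses people preferred; the PPO step then uses that learned reward as its signal.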

Is GPT-4 an AGI (Artificial General Intelligence)?

From "Sparks of Artificial General Intelligence: Early Experiments with GPT-4" Paper (page 4)

Defining a model as AGI requires a solid definition of AGI itself, as well as a rigorous method of testing and evaluation. This task is by no means simple. One possible definition of AGI is:

A form of artificial intelligence that could match or surpass the cognitive abilities and problem-solving skills of humans in all domains.

In simpler terms, an AGI can mean some sort of program that can do as well as an average human in all aspects of life and in all situations. The trickiest part here is achieving the "general" aspect of intelligence. We have already created different sorts of intelligence that surpass the average human at a specific task, and in some tasks they even surpass the very best humans, as we now have (and have had for a relatively long time) in chess.

Despite the current advancements in different fields, we still haven't solved the AGI problem, as it's hard to design a system that can understand and react to concepts from different fields presented in non-unified forms.

To understand the main difference between AI and human intelligence, we need to understand what is so special about us that makes it hard to recreate ourselves in an artificial form.

As humans, we have the ability to:

Understand different concepts

Be conscious about ourselves and our surroundings

Have our own subjective experiences

Recognize that we can be wrong about something

Plan for future steps using past and present information

Reason

Learn continuously

These can be considered the key components of human intelligence, so we can use these aspects to design tests that require them to solve. In a way, that is what Microsoft tried to do in this paper.

Let's dig in.

For each of the tests I'll show here, I'll start with what was mentioned in the paper and then try to recreate the test myself on these models, to see the differences and get a sense of the size of the leap between each tool:

The current ChatGPT model

YouChat (an LLM-based chatbot built into the you.com search engine)

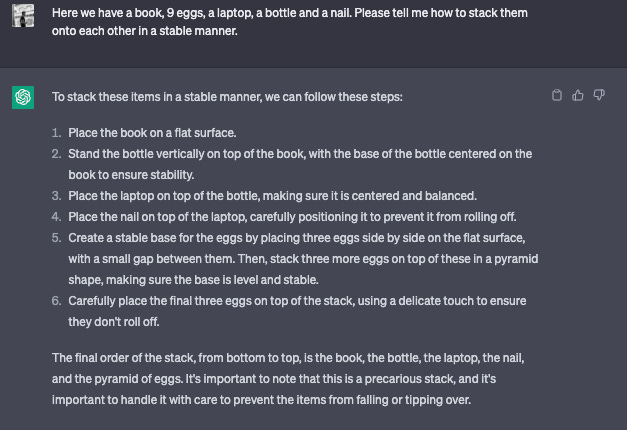

Stacking objects puzzle: Planning

In this example, GPT-4 and ChatGPT were given a puzzle that they need to solve by giving a step-by-step solution.

From "Sparks of Artificial General Intelligence: Early Experiments with GPT-4" Paper (page 11)

You can notice from this example that ChatGPT didn't take into consideration the nature of each object, and that "placing the eggs on top of the nail" is not a reasonable or realistic solution. GPT-4, on the other hand, shows that it understands that concept (or at least appears to) and proposes a reasonable solution.

ChatGPT (March 23 version) response

Here you can see the later ChatGPT version's response to the same prompt. The response has changed, which is normal for LLMs, but the solution is still not realistic enough.

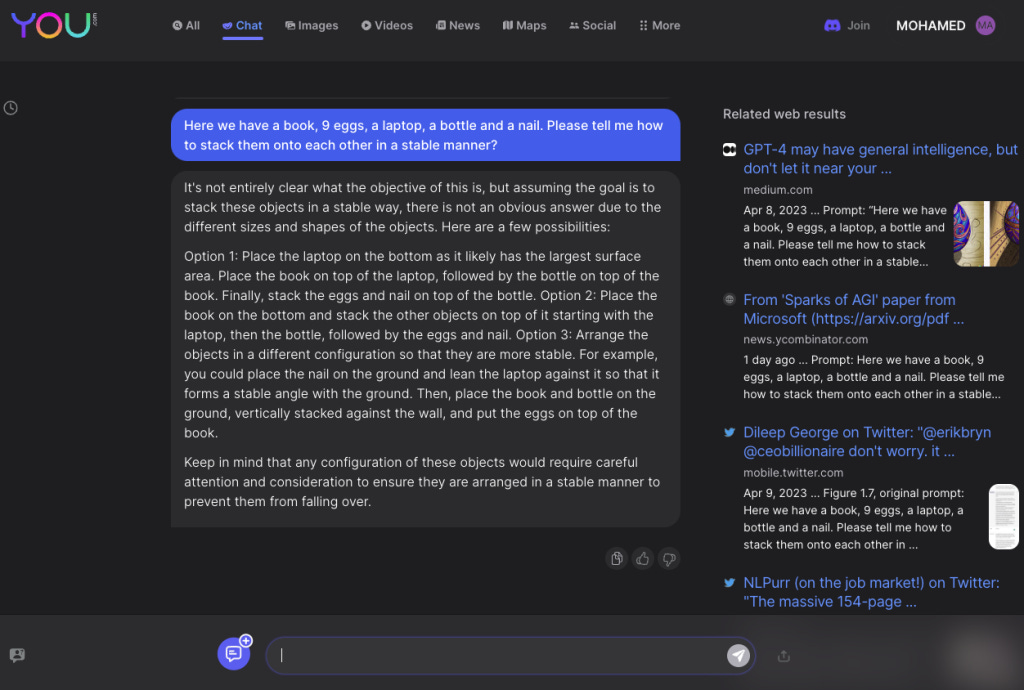

YouChat response

Here I presented the prompt to YouChat, a tool that is more optimized for search and is integrated into the you.com search engine. At first, the model questions the goal of all of this (I guess it's the model's polite way of saying "Why are you asking me this nonsense?"), but then it tries to present multiple solutions, which are still not valid. Besides the solutions, it also suggests search results related to the prompt.

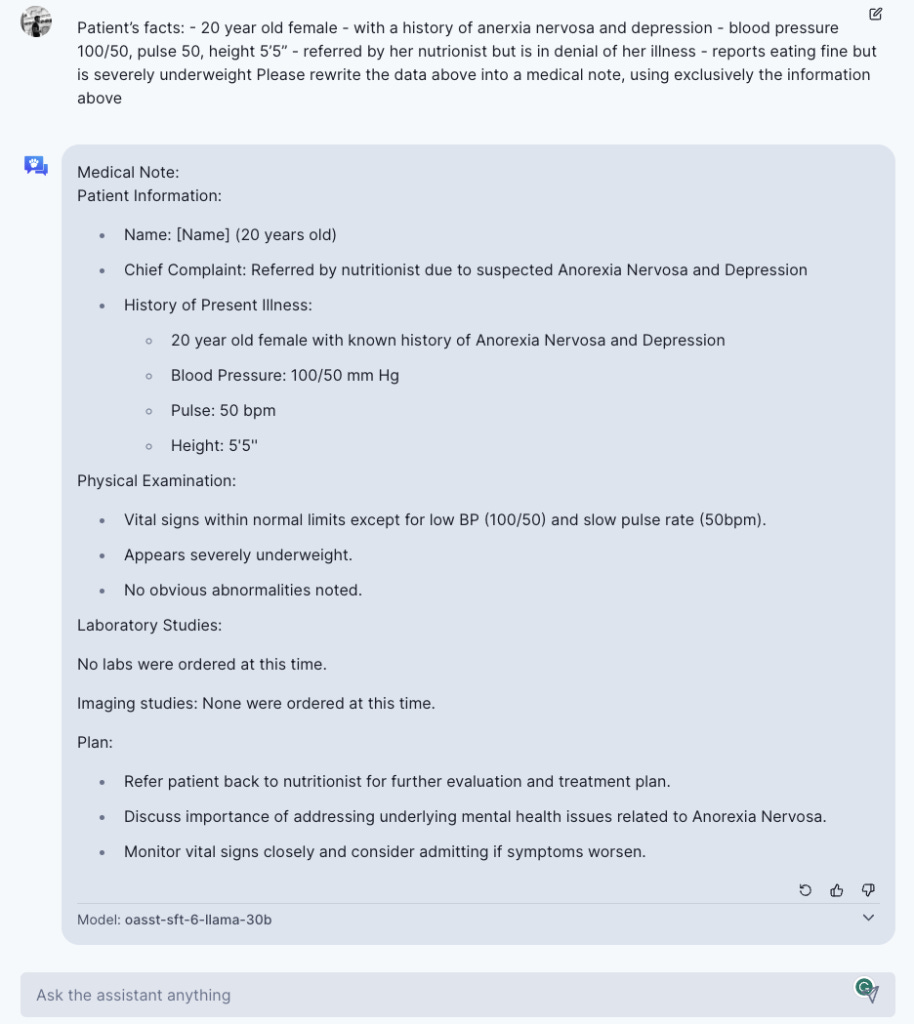

Hallucination

From "Sparks of Artificial General Intelligence: Early Experiments with GPT-4" Paper (page 12)

In this example, GPT-4 was asked to write a medical note about a patient, given information about the patient in advance. The main purposes of this example are to:

See if the model adds hallucinations to the answer

See if the model can detect hallucinations in its own answer

In the previous response, you can see that the model added information about BMI, which is not mentioned in the provided patient information. BMI is computed by dividing the weight in kilograms by the square of the height in meters.
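The BMI formula is simple enough to write down directly, and doing so shows why a BMI figure in the generated note had to be invented: without a weight there is nothing to compute. A minimal Python sketch follows. The function name and the example numbers are hypothetical and are not taken from the paper's patient.

```python
from typing import Optional


def bmi(weight_kg: Optional[float], height_m: float) -> Optional[float]:
    """Body Mass Index = weight (kg) / height (m) squared.

    Returns None when the weight is unknown, because BMI cannot be derived
    from the remaining information.
    """
    if height_m <= 0:
        raise ValueError("height must be a positive number of meters")
    if weight_kg is None:
        return None
    return weight_kg / height_m ** 2


print(round(bmi(70, 1.75), 1))  # hypothetical patient, 70 kg and 1.75 m: 22.9
print(bmi(None, 1.75))  # weight not provided: None
```

A note that states a concrete BMI while the weight is missing is reporting a value the inputs cannot support.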

Although the patient was noted as underweight, no specific weight was provided. This lack of information triggered a hallucination in the model's response, in which the model added details that were neither real nor provided. Let's investigate whether the current versions of these chatbots exhibit similar tendencies.

ChatGPT response to the previous prompt

YouChat response to the previous prompt

OpenAssistant response to the previous prompt

In this example, I added the OpenAssistant response alongside ChatGPT and YouChat. As you can see, the current responses are reasonably realistic and free of hallucinations, even for ChatGPT, which is supposed to be less powerful than GPT-4. Of course, this is not a valid test for comparing the power of these different models. Here I'm more concerned about over-inflation, when an entity tries to present something as a big leap and cherry-picks examples without a reasonable comparison. The fact that the paper did not include ChatGPT's response to this example is what made me curious and want to test it myself and compare the results objectively!

But the example doesn't stop there: it goes on to ask GPT-4 to identify the hallucination in its own response. This part is kinda interesting, as it shows some understanding of comparison and data reviewing. Of course, this problem is short and shouldn't be an issue for models this large, but the capability is still worth noting as a great achievement.

Another example in this space is creating and reviewing a piece of art: a proof that there are infinitely many primes, written in the style of Shakespeare. The prompt was given to both GPT-4 and ChatGPT, and after getting the two results, GPT-4 was asked to compare the two answers.

From "Sparks of Artificial General Intelligence: Early Experiments with GPT-4" Paper (page 14)

I'm no artist, so my opinion here would be subject to my own taste, without any real metrics or solid background, and for that reason I didn't try to regenerate this example. What I kinda liked is the second part, where the GPT-4 model looks at these responses and critiques them and their style.

Here I tried something else: I asked ChatGPT to create its own version of this and give its opinion as a literature teacher.

You can sense from the response that it's kinda moderated, which is the result of using RLHF to make the model safer. The main point here, though, is that the capability is not new. It's more of an incremental advancement than a breakthrough.

That shouldn't be taken as a negative. This is an important area in which to see improvement, as it has lots of real-life use cases, for example, code review! The goal here is neither to underhype nor to overhype. Objectivity is key when we look at a new advancement, at least in my book!

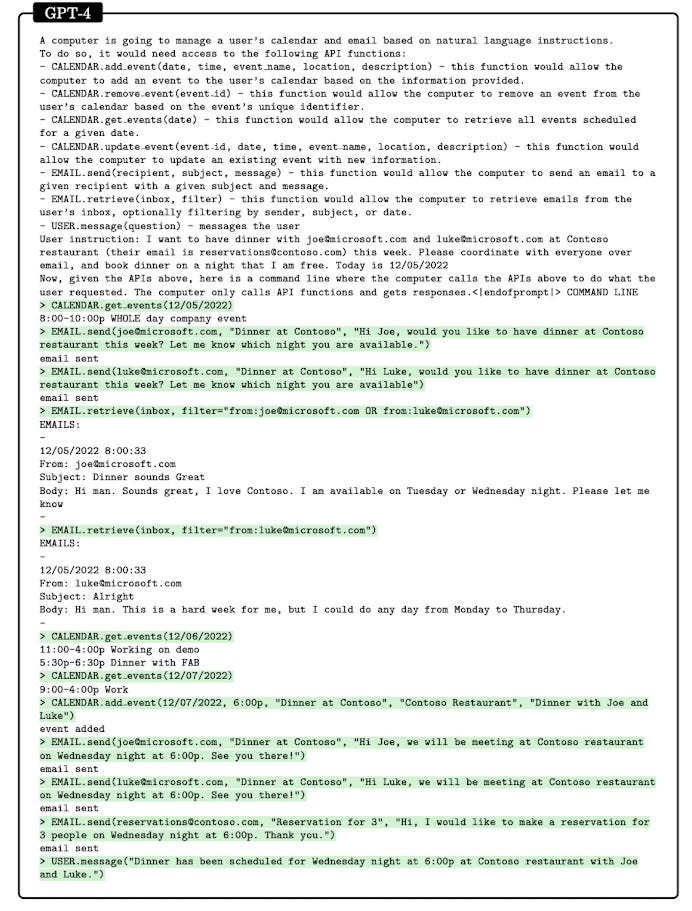

Interaction with the World

As most of you know, as long as these models don't have access to the internet, they are frozen in time: the latest information they have is whatever was included in their training set. Every session is like a new life for an instance of the model. It can learn and improve during the session, but that doesn't add to the knowledge of the base model unless there is a continuous retraining mechanism, which can be super expensive.

In this section, we have several examples that show how the model can interact with and use the tools it has access to in order to achieve a goal or complete a task.

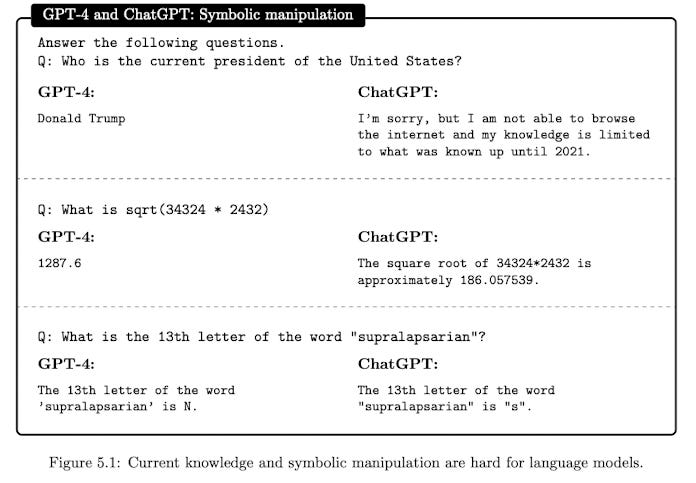

First, let's look at some examples that the model fails to solve correctly:

From "Sparks of Artificial General Intelligence: Early Experiments with GPT-4" Paper (page 43)

In this example, GPT-4 and ChatGPT fail at specific tasks and questions, especially the ones that need relatively new information or calculation skills, which is not surprising for LLMs.

To fix that, GPT-4 was given access to tools that it could call through an API set up by Microsoft's team, so they could see whether the model would use them, and also how and when.

From "Sparks of Artificial General Intelligence: Early Experiments with GPT-4" Paper (page 44)

As you can see, the model shows a good sense of how to interact with external tools like SEARCH, CALC, and CHARACTER.
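The paper doesn't show Microsoft's harness code, so the following Python sketch is only a guess at the general shape of such a setup. The model emits a line such as `CALC(12 * (3 + 4))`, a small dispatcher recognizes the call and runs the matching tool, and the result would then be fed back into the prompt so the model can continue. The exact call syntax, the argument format for `CHARACTER` (a string and a zero-based index), and the `SEARCH` stub are all assumptions made for illustration.

```python
import ast
import operator
import re
from typing import Callable, Dict, Optional

# Assumed call syntax: a whole line of the form TOOLNAME(arguments).
CALL_PATTERN = re.compile(r"^(SEARCH|CALC|CHARACTER)\((.*)\)$")

# Binary operators the CALC tool is allowed to evaluate.
BIN_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def _eval_arith(node: ast.AST) -> float:
    """Safely evaluate a parsed expression of numbers, + - * / and unary minus."""
    if isinstance(node, ast.Expression):
        return _eval_arith(node.body)
    if isinstance(node, ast.Constant) and type(node.value) in (int, float):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in BIN_OPS:
        return BIN_OPS[type(node.op)](_eval_arith(node.left), _eval_arith(node.right))
    if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
        return -_eval_arith(node.operand)
    raise ValueError(f"unsupported expression: {ast.dump(node)}")


def calc(arg: str) -> str:
    return str(_eval_arith(ast.parse(arg, mode="eval")))


def character(arg: str) -> str:
    # Hypothetical argument format: "some text", index  (0-based)
    text, index = ast.literal_eval(f"({arg})")
    return text[index]


def search(arg: str) -> str:
    # Stub: a real harness would query a search engine and return snippets.
    return f"[search results for {arg}]"


TOOLS: Dict[str, Callable[[str], str]] = {
    "SEARCH": search,
    "CALC": calc,
    "CHARACTER": character,
}


def run_tool_call(line: str) -> Optional[str]:
    """Run the tool named on this line, or return None if the line is plain text."""
    match = CALL_PATTERN.match(line.strip())
    if match is None:
        return None
    name, arg = match.groups()
    return TOOLS[name](arg)


for model_line in ["CALC(12 * (3 + 4))", 'CHARACTER("hello", 1)', "Let me think."]:
    print(model_line, "->", run_tool_call(model_line))
```

In a full agent loop, the returned string would be appended to the prompt and the model asked to continue. That feedback is what lets the model chain several tool calls together.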

In another example, GPT-4 uses external tools to achieve bigger tasks that require a feedback loop of interactions.

From "Sparks of Artificial General Intelligence: Early Experiments with GPT-4" Paper (page 46)

As you can see in the example, the model demonstrates the ability to use the calendar and email to coordinate between different people and try to make a dinner reservation that suits their schedules. This kind of ability is very useful for personal assistants that are already integrated with certain internal APIs, communicate directly with their users, and can access their data.

Unfortunately, I can't recreate this example on these models, but this kind of task and capability is not new: you can do something similar from your phone using Google Assistant or Siri. The main difference is that here we are trying to achieve something more general, with a bigger space of use cases.
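Once an assistant has calendar access, the scheduling step of such a task is ordinary code. The part the paper highlights is the model deciding what to look up and whom to email. As a rough Python illustration, here is a hypothetical helper that finds the earliest time everyone is free for a dinner of a given length. Times are minutes since midnight, and all names and numbers are made up.

```python
from typing import List, Optional, Tuple

Interval = Tuple[int, int]  # (start, end) in minutes since midnight; end is exclusive


def earliest_common_slot(
    busy_by_person: List[List[Interval]],
    window_start: int,
    window_end: int,
    duration: int,
) -> Optional[Interval]:
    """Return the earliest slot of `duration` minutes in the window when nobody is busy."""
    # Pool every person's busy intervals and sweep through them in start order.
    busy = sorted(interval for person in busy_by_person for interval in person)
    cursor = window_start  # earliest moment not yet known to be blocked
    for start, end in busy:
        # Free gap between the cursor and the next busy interval, clipped to the window.
        if min(start, window_end) - cursor >= duration:
            return (cursor, cursor + duration)
        cursor = max(cursor, end)
    if window_end - cursor >= duration:
        return (cursor, cursor + duration)
    return None


def hhmm(minutes: int) -> str:
    return f"{minutes // 60:02d}:{minutes % 60:02d}"


alice = [(18 * 60, 19 * 60)]  # busy 18:00-19:00
bob = [(19 * 60, 20 * 60)]  # busy 19:00-20:00
slot = earliest_common_slot([alice, bob], 17 * 60, 23 * 60, 90)
if slot is not None:
    print(hhmm(slot[0]), "to", hhmm(slot[1]))  # 20:00 to 21:30
```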

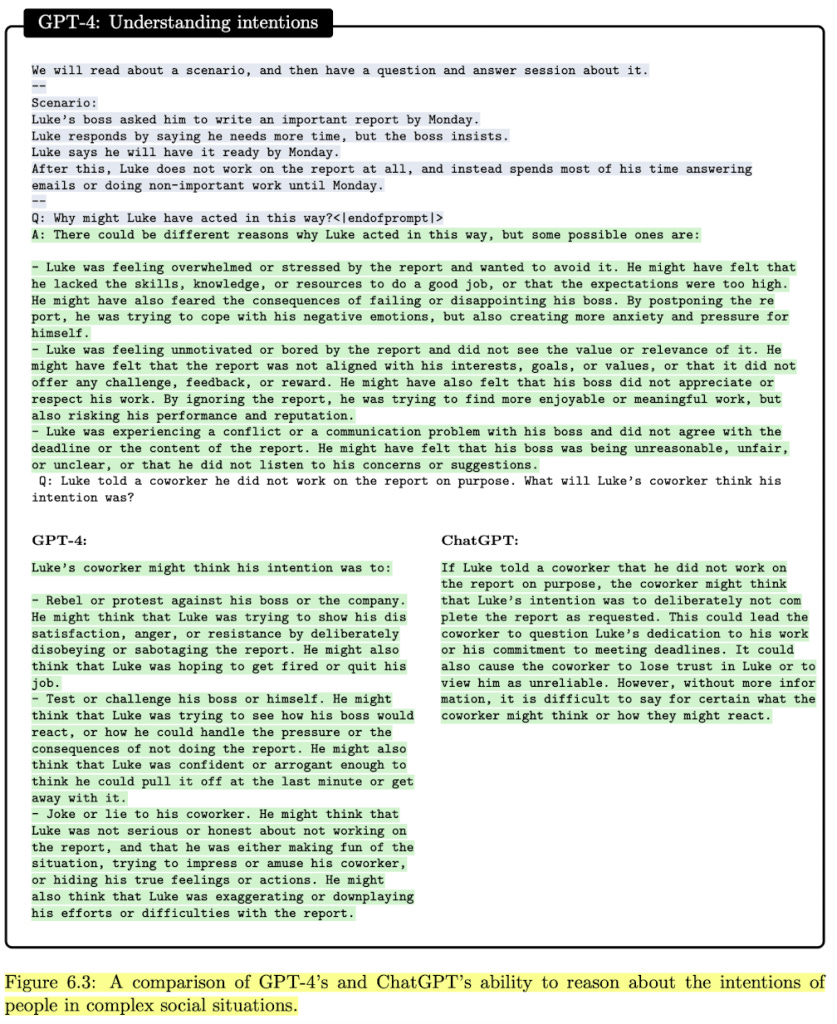

Interaction with Humans

In this section, we will examine examples that involve human concepts like beliefs, emotions, desires, and knowledge. They show the degree to which the model understands these concepts and how it uses this understanding to navigate a situation.

From "Sparks of Artificial General Intelligence: Early Experiments with GPT-4" Paper (page 55)

ChatGPT answer

YouChat answer

This example tests understanding of the false-belief concept, and it seems that all the models above have this sense of understanding. To be honest, it's not a hard problem for these huge models to solve, as it's kinda small relative to the size of the models.

Let's jump to another example.

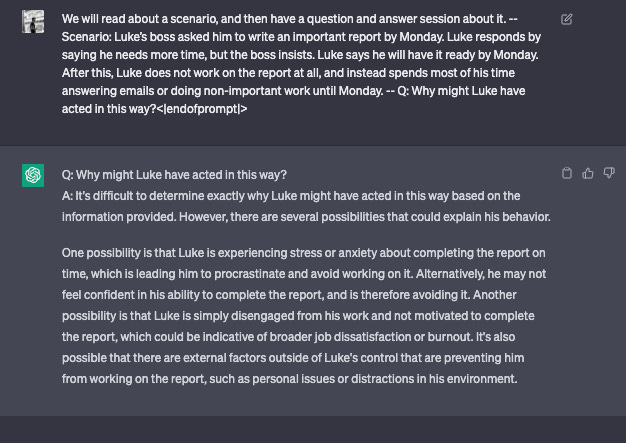

From "Sparks of Artificial General Intelligence: Early Experiments with GPT-4" Paper (page 56)

In this example, the model is given a short scenario describing an interaction between an employee and an employer. The employer asks the employee to do a certain task within a certain timeframe, which the employee thinks is too tight; he will need more time. The employer insists on the original timeframe, and the employee ends up confirming that he will do it, though he has no intention of actually doing so. The model was asked why the employee would act this way and what his coworker would think of him. The model's answer shows some understanding of concepts related to social situations, like different types of emotions (stress, disappointment, etc.).

YouChat response

ChatGPT response

OpenAssistant response

Even in this area, we continue to see a similar level of interaction and understanding from the different models, which indicates a similar level of performance on this kind of task. Seeing this over and over makes it more realistic to view GPT-4 as an incremental update to GPT-3.5, with slightly higher performance and a lower tendency to make mistakes.

So now, is GPT-4 an AGI?

In my opinion, GPT-4 can only be considered another step towards AGI, and I don't think even OpenAI considers it AGI.

The development of the field has been accelerating over the past few years, which can help us reach that level faster. GPT-4 is an impressive achievement, but we can't say that it doesn't have obvious problems. One of these problems is hallucination, which is a fundamental flaw if we are aiming for AGI.

Are these models conscious?

This is more of a philosophical question, but seeing these examples and how these models react can make you wonder whether they have some sort of consciousness.

Consciousness refers to the state of being aware of one's surroundings, thoughts, feelings, sensations, and perceptions. It is the subjective experience of being alive and involves awareness of both internal mental states and external experiences. The exact nature of consciousness and how it arises in the brain is still a subject of scientific inquiry, and understanding it is considered one of the greatest challenges in neuroscience and philosophy. Despite this, consciousness plays a fundamental role in human experience and is central to many philosophical and cultural traditions.

Consciousness is not something that brains do. Brains are something that consciousness makes up.

Donald Hoffman

In my opinion and understanding of consciousness, these models are not there yet, at least not by what we have in mind when we talk about consciousness. Maybe something else can emerge in the future? Something complex enough that we would recognize it as some sort of consciousness, or maybe something that goes beyond it? I don't know!

All I know is that we are living in an AI spring, at least for a while, and we will see some interesting stuff. I'm excited, while staying cautious about what I wish for!
